Redis is an excellent database for apps that require sub-millisecond response times. Even though the database is very performant, there are a few gotchas… As your dataset grows, you can run into issues that take a long time to resolve (especially if you don’t know where to look). Two such examples are the HotKeys and BigKeys issues. Let me explain how to fix them.

There is a problem…

Your database is running slow, and you see latency/CPU spikes.

(Typically after your database grew to hundreds of thousands of keys.)

What’s happening?

Well, you might be facing the issue of HotKeys or BigKeys (or both if you are unlucky enough).

Why do such issues exist?

There is a rule of thumb in Redis: one shard can handle up to 25GB of data and up to 25k operations per second (ops/sec).

Now…

You might have hundreds of shards (even thousands) depending on your database size.

These shards TOGETHER can handle millions of ops/sec and store billions of keys…

But how is this load distributed PER individual shard?

Does it violate the rule of thumb I mentioned above?

Both HotKeys and BigKeys usually push a single shard past that capacity (and then your entire database suffers).

These can be extremely hard to identify, especially in larger deployments (spanning hundreds of shards).

Still, there is a simple way of fixing the issue.

Let me tell you more.

HotKeys in Redis

A HotKey is a key that is accessed much more frequently than other keys.

(Think of metadata and configuration that your app needs very often…)

A single key will always reside on a single shard (hence, the 25k ops/sec shard capacity applies).

Does your app request a particular key more than 25,000 times a second? If so, the shard where the key resides will likely run at high CPU (say, above 80%).

Other requests (that depend on this key) will drag because of the slow shard, and your entire database will experience high latency…

No one wants to see this happening…

(It’s an absolute No-No.)

But how do you fix this issue?

Well, you have to identify it first…

How to identify the HotKeys issue?

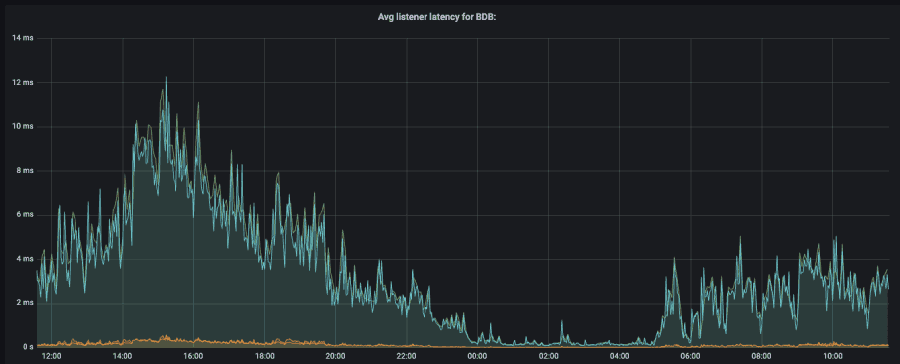

First of all, you will know that something is wrong…

Your database will start dragging, and you will see higher than usual latency (especially in peak traffic hours).

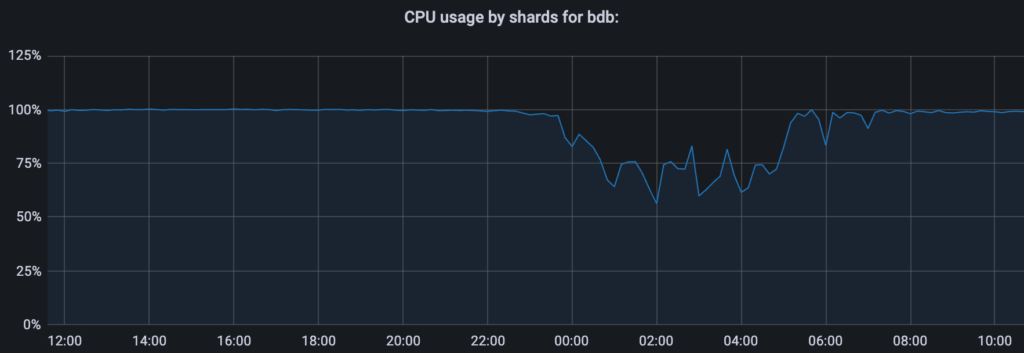

If you look at the CPU of each individual shard (for example, from Prometheus/Grafana), you will most likely see something similar to this…

The problematic shard in this example is hitting 100% CPU (in the peak hours)… The shard is basically on its knees, and the entire database suffers (high latency)…

These graphs indicate that something odd is happening with the shard in question. Is it the hotKeys issue?

Let’s find out…

Steps to check for the hotKeys issue

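You can run a command that scans the keyspace for hotKeys.

Before that, you must set the eviction policy of your database to allkeys-lfu (volatile-lfu works too), because the scan relies on the LFU access-frequency counters. This action does not impact your database, only what happens when you hit the memory limit. On open-source Redis, you can set it with CONFIG SET maxmemory-policy allkeys-lfu.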

Now you are all set…

Run the command below from your redis-cli.

```shell
redis-cli -h redis-xxxxx.xxx.us-east-1-4.ec4.cloud.redislabs.com -p 12514 -a <password> --hotkeys
```

(Replace <password> with your DB password.)

You will get an output similar to this.

```text
-------- summary -------
Sampled 27 keys in the keyspace!
hot key found with counter: 7 keyname: "room:1:3"
hot key found with counter: 6 keyname: "user:2:rooms"
hot key found with counter: 3 keyname: "user:1:rooms"
```

There you have it… Several keys fall under the hotKeys label…
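If you run the scan regularly (for example, from a scheduled job), it can help to turn the output into data. The Python sketch below is a minimal, hypothetical helper, not part of redis-cli: it assumes the line format shown in the sample output above (quotes around the key name are optional, and fields may be separated by spaces or a tab) and returns the keys sorted by counter, hottest first.

```python
import re
from typing import List, Tuple

# Matches lines such as: hot key found with counter: 7 keyname: "room:1:3"
# The quotes around the key name are treated as optional.
HOTKEY_LINE = re.compile(
    r'hot key found with counter:\s*(\d+)\s+keyname:\s*"?([^"]+?)"?\s*$'
)


def parse_hotkeys(output: str) -> List[Tuple[str, int]]:
    """Return (key, counter) pairs from `redis-cli --hotkeys` output, hottest first."""
    found = []
    for line in output.splitlines():
        match = HOTKEY_LINE.search(line.strip())
        if match:
            found.append((match.group(2), int(match.group(1))))
    # Highest counter first, so the worst offenders are at the top.
    return sorted(found, key=lambda pair: pair[1], reverse=True)


sample = '''
-------- summary -------
Sampled 27 keys in the keyspace!
hot key found with counter: 7 keyname: "room:1:3"
hot key found with counter: 6 keyname: "user:2:rooms"
hot key found with counter: 3 keyname: "user:1:rooms"
'''
print(parse_hotkeys(sample))
# [('room:1:3', 7), ('user:2:rooms', 6), ('user:1:rooms', 3)]
```

The resulting list is a convenient input for the key-to-shard mapping described next.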

NOTE: The --hotkeys scan returns at most 16 hotkeys (the limit is hard-coded in redis-cli).

TIP: You can also use the OBJECT FREQ <key> command to see a key’s logarithmic access-frequency counter (it ranges from 0 to 255 and, like --hotkeys, only works with an LFU eviction policy).
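The OBJECT FREQ check can also be scripted for a list of suspect keys. This is a minimal sketch assuming the redis-py client, whose `config_get` and `object` methods send CONFIG GET and OBJECT; the helper name `key_frequencies` is hypothetical. CONFIG GET may be restricted on some managed deployments, in which case you can drop the policy check.

```python
from typing import Dict, Iterable


def key_frequencies(client, keys: Iterable[str]) -> Dict[str, int]:
    """Return the OBJECT FREQ counter for each key that exists.

    `client` is assumed to be a redis-py `redis.Redis` instance, or any object
    with the same `config_get(pattern)` and `object(infotype, key)` methods.
    """
    policy = client.config_get("maxmemory-policy").get("maxmemory-policy", "")
    if "lfu" not in policy:
        # OBJECT FREQ only works when an LFU eviction policy is active.
        raise RuntimeError(f"maxmemory-policy is {policy!r}, expected an LFU policy")
    frequencies = {}
    for key in keys:
        freq = client.object("freq", key)
        if freq is not None:  # None means the key does not exist
            frequencies[key] = int(freq)
    return frequencies
```

With redis-py, a call could look like `key_frequencies(redis.Redis(host="localhost", port=6379), ["room:1:3", "user:2:rooms"])`.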

But what to do now?

Well, now you have to check which shard those problematic keys reside on…

Key to shard mapping

Depending on your deployment (e.g., Redis Cloud), you may need to ask the support team to run the query for you, because it requires access to the shard-cli.

Here is the command you (or the support team) should run in the shard-cli dialogue.

```text
<shard-no> EXISTS <key-name>
```

(For example, I would run 1 EXISTS room:1:3 to check if the key room:1:3 is stored on the shard with ID 1…)

A return value of 0 means the key is not on that shard, and 1 means it is.

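If you don’t have access to the shard-cli, there is also a faster way to determine which key slot a key maps to, based on its name alone: Redis computes a CRC16 checksum of the key name modulo 16384 (this is called hashing in Redis).

Note: this guide applies only to the default/standard hashing policy. It also assumes the key name has no hash tag; if the name contains a non-empty {…} section, only the part between the braces is hashed.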

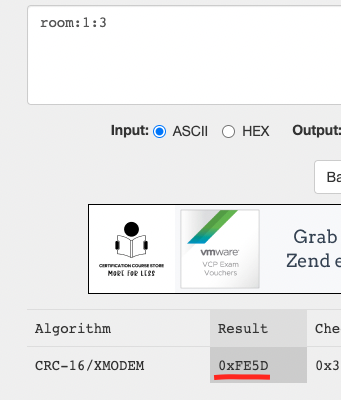

Go here and enter the key name that you are interested in (in my case, it’s room:1:3).

Copy the hexadecimal value from the Result column as shown below (make sure it is the CRC-16/XMODEM variant, which is the one Redis uses).

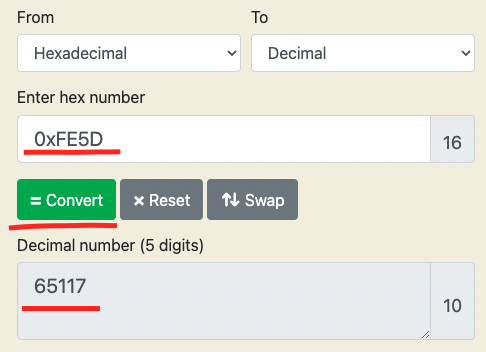

Paste that value into the Enter hex number field of the calculator here and press Convert.

Now you have to use the modulo calculator from this link.

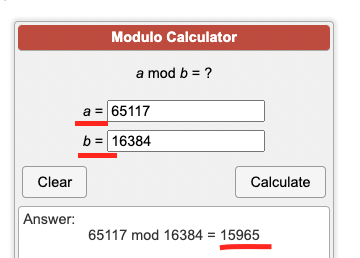

In the calculator, enter the decimal number from the previous step in the a) field and enter 16384 in the b) field.

Here is what my calculator looks like (for the key room:1:3).

This is the number I got back.

15,965
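The manual steps above (CRC, hex to decimal, modulo 16384) can also be done in a few lines of code. Redis Cluster computes a key’s slot as CRC16 of the key name (the XMODEM variant: polynomial 0x1021, initial value 0) modulo 16384, and when the name contains a non-empty hash tag such as {user42}, only the part inside the braces is hashed. The Python sketch below is a plain re-implementation of that rule for illustration, not an official client API; where CLUSTER KEYSLOT is available, use it to double-check.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM (poly 0x1021, init 0x0000), the CRC16 variant Redis Cluster uses."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Map a key name to one of the 16384 key slots (default hashing policy)."""
    raw = key.encode("utf-8")
    # Hash tags: if the key contains "{...}" with a non-empty body,
    # only the part between the first "{" and the next "}" is hashed.
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


print(key_hash_slot("foo"))       # 12182
print(key_hash_slot("room:1:3"))  # the slot for the example hotKey
```

Hash tags are also a trap worth knowing about: keys such as room:{1}:3 and room:{1}:4 always share a slot, and therefore a shard.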

But what is this number?

Keyslots and shards

Every key in Redis is stored in a key slot. Redis has 16384 key slots that can be distributed across many shards (if you have only one shard, all 16384 key slots are there).

Similarly, if your DB has 2 shards, each will host ± 8k key slots… If you have 4 shards, each will host ±4k key slots…
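To get a feel for these numbers, here is a minimal Python sketch that splits the 16384 slots into contiguous, near-equal ranges. This is a simplifying assumption: real deployments can assign slots unevenly or non-contiguously, and the positions returned here are list indexes, not the shard IDs you see in Grafana.

```python
from typing import List, Tuple

TOTAL_SLOTS = 16384


def even_slot_ranges(num_shards: int) -> List[Tuple[int, int]]:
    """Split slots 0..16383 into `num_shards` contiguous, near-equal inclusive ranges."""
    base, extra = divmod(TOTAL_SLOTS, num_shards)
    ranges, start = [], 0
    for shard in range(num_shards):
        # The first `extra` shards take one additional slot each.
        size = base + (1 if shard < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def shard_for_slot(slot: int, ranges: List[Tuple[int, int]]) -> int:
    """Return the index of the range (shard) that contains `slot`."""
    for index, (first, last) in enumerate(ranges):
        if first <= slot <= last:
            return index
    raise ValueError(f"slot {slot} is not covered")


print(even_slot_ranges(2))                          # [(0, 8191), (8192, 16383)]
print(shard_for_slot(15965, even_slot_ranges(4)))   # 3
```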

So, in my case, the number 15,965 refers to the key slot where the key resides…

But on what shard does that key slot reside?

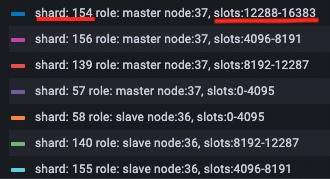

The most user-friendly way to check for this is from your Grafana dashboard (if you have the Prometheus integration).

Here is a snapshot (any metric that concerns shards will have the slots mentioned).

Where is the 15,965th key slot stored?

It’s stored on shard ID 154, so my hotKey room:1:3 lives on shard 154…

Note: you can get a key’s slot directly with the CLUSTER KEYSLOT command, and the slot-to-shard mapping with CLUSTER SLOTS (or CLUSTER SHARDS in Redis 7.0+), if these commands are enabled on your deployment.
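On deployments where the CLUSTER SLOTS command is enabled, its reply lists each slot range together with the node serving it, roughly as [start_slot, end_slot, [host, port, node_id], followed by replica entries]. The sketch below is a hypothetical helper that looks up a slot in a reply of that shape; how you fetch the raw reply depends on your client library, and the addresses in the example are made up.

```python
from typing import Optional, Sequence


def owner_of_slot(cluster_slots: Sequence[Sequence], slot: int) -> Optional[str]:
    """Return "host:port" of the master serving `slot`, or None if no range covers it.

    Each entry is assumed to look like
    [start_slot, end_slot, [host, port, node_id, ...], <replica entries>...].
    """
    for entry in cluster_slots:
        start, end, master = entry[0], entry[1], entry[2]
        if start <= slot <= end:
            return f"{master[0]}:{master[1]}"
    return None


# Hypothetical two-node reply; the first range also has one replica.
reply = [
    [0, 8191, ["10.0.0.1", 6379, "node-a"], ["10.0.0.3", 6379, "node-c"]],
    [8192, 16383, ["10.0.0.2", 6379, "node-b"]],
]
print(owner_of_slot(reply, 15965))  # 10.0.0.2:6379
```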

How to resolve the hotKeys issue (and avoid it further)?

You are almost there…

The biggest part of the job is already done, as you know which keys are causing the shard CPU to skyrocket…

Now it’s a matter of reducing the load on those keys.

For instance, the problematic key might contain a hash (or a list) that can be further decomposed and broken up into multiple keys.

(So, this also requires some minimal refactoring on your app side.)

Once you distribute the load across different keys, make sure to give those keys names that map to different key slots (and, ideally, to different shards).

To check that, just use the key-slot calculation I introduced earlier.
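As a sketch of the decomposition idea, assume the hot key room:1:3 is a hash with many fields. One option is to spread its fields over a fixed number of smaller hashes and always derive the sub-key from the field name, so the app knows where to read (HGET) and write (HSET). The naming scheme room:1:3:bucket:N and the helpers below are hypothetical; zlib.crc32 is used only because, unlike Python’s built-in hash() for strings, it returns the same value in every process.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List


def bucket_key(base_key: str, field: str, num_buckets: int) -> str:
    """Return the sub-key that holds `field` after splitting `base_key` into buckets."""
    # A stable checksum keeps the field-to-bucket mapping identical across app instances.
    bucket = zlib.crc32(field.encode("utf-8")) % num_buckets
    return f"{base_key}:bucket:{bucket}"


def plan_split(base_key: str, fields: Iterable[str], num_buckets: int) -> Dict[str, List[str]]:
    """Group fields by the sub-key they would move to."""
    plan = defaultdict(list)
    for field in fields:
        plan[bucket_key(base_key, field, num_buckets)].append(field)
    return dict(plan)


plan = plan_split("room:1:3", [f"member:{i}" for i in range(1000)], 8)
print({key: len(fields) for key, fields in plan.items()})
```

Because these sub-key names contain no {…} hash tag, each one is hashed in full and will usually land in a different key slot. Different slots can still live on the same shard, so verify the placement before relying on it.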

And that’s all there is to it — your app now works lightning-fast…

Unless you have an issue with the bigKeys (as well).

HINT

A nice, human-readable way to monitor how often a potentially problematic key is accessed is the following.

Let’s say I have a key called room:1:3. I can simply create another key called room:1:3:access_freq_last_5_min and set its TTL (time to live — expiry) to 5 minutes.

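Now, everywhere in my app where I read the key, I would also use the INCR command to increment room:1:3:access_freq_last_5_min by 1… Keep in mind that once the counter expires, the next INCR recreates it without a TTL, so set the 5-minute expiry again whenever the counter is recreated (that is, when INCR returns 1).

Whenever you want to see how many times the room:1:3 key was accessed over the last 5 minutes (more precisely, since the current 5-minute window started), simply GET room:1:3:access_freq_last_5_min.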
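The counter from this hint can be wrapped in a small helper. This is a minimal sketch assuming a redis-py style client (any object with `incr(name)` and `expire(name, seconds)` methods); the function names are hypothetical. Because INCR on a missing key creates it without a TTL, the sketch sets the expiry only when the counter is first created, which gives a fixed 5-minute window rather than a true sliding window.

```python
def record_access(client, key: str, window_seconds: int = 300) -> int:
    """Count one access to `key` in the current window and return the count so far."""
    counter_key = f"{key}:access_freq_last_{window_seconds // 60}_min"
    count = client.incr(counter_key)
    if count == 1:
        # INCR just created a fresh counter with no TTL, so start a new window.
        client.expire(counter_key, window_seconds)
    return count


def approx_ops_per_sec(count: int, window_seconds: int = 300) -> float:
    """Average access rate over the window, comparable with the 25k ops/sec shard budget."""
    return count / window_seconds
```

With redis-py, calling `record_access(redis.Redis(host="localhost", port=6379), "room:1:3")` on every read of room:1:3 would maintain the counter. If the process dies between INCR and EXPIRE, the counter never expires; sending INCR and EXPIRE with the NX option (Redis 7.0+) together in one MULTI/EXEC transaction closes that gap.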

BigKeys in Redis

BigKeys are the keys that consume a lot of memory — the keys that are huge in their size.

The rule-of-thumb recommendation of Redis is to keep a key under 2MB (ideally below 1MB).

If the key is a composite one (e.g., a list, a hash, etc.), the recommendation is to keep the number of elements under 10,000 (you can always break it down into multiple keys).
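These thresholds are easy to turn into a check you can run against sizes reported by --memkeys or the MEMORY USAGE command. A minimal sketch; the function name is hypothetical and it assumes MB means 1024 × 1024 bytes.

```python
from typing import List, Optional

MB = 1024 * 1024
IDEAL_MAX_BYTES = 1 * MB   # "ideally below 1MB"
HARD_MAX_BYTES = 2 * MB    # "under 2MB"
MAX_ELEMENTS = 10_000      # for lists, hashes, sets, sorted sets, ...


def big_key_warnings(size_bytes: int, elements: Optional[int] = None) -> List[str]:
    """Return human-readable warnings for a key, based on the rule-of-thumb limits."""
    warnings = []
    if size_bytes >= HARD_MAX_BYTES:
        warnings.append(f"size {size_bytes / MB:.1f} MB is over the 2 MB guideline")
    elif size_bytes >= IDEAL_MAX_BYTES:
        warnings.append(f"size {size_bytes / MB:.1f} MB is over the ideal 1 MB")
    if elements is not None and elements >= MAX_ELEMENTS:
        warnings.append(f"{elements} elements is over the 10,000-element guideline")
    return warnings


print(big_key_warnings(3 * MB))
print(big_key_warnings(50_000, elements=25_000))
```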

Why do big keys cause a problem?

Like every other technology, Redis’s architecture has physical limits and specific recommendations (for the best performance).

Size-wise, the maximum recommended capacity of a single shard is 25GB.

The bigKeys issue interferes with the recommended capacity of a shard.

In the extreme case, a single key can grow to consume the entire memory of a shard (yes, it can consume 25GB of data)…

So the bigger the key is, the less use you will get from the distributed architecture of Redis.

And why?

Because the bigger the key, the harder it is to move between shards (for example, when you rebalance or scale out).

In extreme cases, you won’t benefit from scaling out your database (by adding more shards) because a single key can reside only on one shard.

Imagine a key that is 25GB in size. Whatever number of additional shards you add to your database won’t impact this key… The key will remain on the same shard (or move to some other, but it will consume the entire shard).

(As a single key cannot be split across multiple shards…)

And, of course, as the shard becomes less and less performant, the entire database will suffer from the increased latency.

How to identify bigKeys?

Similar to what you did with hotKeys, there is a command that you can run straight from your redis-cli.

```shell
redis-cli -u <database-endpoint> --bigkeys -a <password>
```

(Don’t forget to fill in the database endpoint and the password.)

This command will scan the keyspace and return a summary similar to the one below. It uses the SCAN command, so it does not block the server the way KEYS would, though it still adds some load; you can throttle it with the -i <seconds> option.

```text
-------- summary -------
Sampled 13603604 keys in the keyspace!
Total key length in bytes is 289309504 (avg len 21.27)
Biggest string found 'xxx:xxxxxxxx:xxx:xx' has 406333 bytes
Biggest zset found 'my_key' has 23463221 members
0 lists with 0 items (00.00% of keys, avg size 0.00)
0 hashs with 0 fields (00.00% of keys, avg size 0.00)
10068308 strings with 4978088399 bytes (74.01% of keys, avg size 494.43)
0 streams with 0 entries (00.00% of keys, avg size 0.00)
0 sets with 0 members (00.00% of keys, avg size 0.00)
3535296 zsets with 29975463 members (25.99% of keys, avg size 8.48)
```

In this particular example, the sorted set my_key has about 23 million members…

It’s already a good indication that there is a bigKey problem here.

Let’s find out more about the key size by running the next command.

```shell
redis-cli -u <database-endpoint> --memkeys -a <password>
```

And here is the output.

```text
Sampled 13600987 keys in the keyspace!
Total key length in bytes is 289274852 (avg len 21.27)
Biggest string found 'xxx:xxxxxxxx:xxx:xx' has 217954 bytes
Biggest zset found 'my_key' has 19532877467 bytes
0 lists with 0 bytes (00.00% of keys, avg size 0.00)
0 hashs with 0 bytes (00.00% of keys, avg size 0.00)
10065961 strings with 5612029728 bytes (74.01% of keys, avg size 557.53)
0 streams with 0 bytes (00.00% of keys, avg size 0.00)
0 sets with 0 bytes (00.00% of keys, avg size 0.00)
3535026 zsets with 25361957109 bytes (25.99% of keys, avg size 7174.48)
```

So, the key my_key weighs 19,532,877,467 bytes, which is about 18 GiB (19.5 GB in decimal units)…
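To track these summaries over time, you may want to extract the "Biggest ..." lines. The sketch below is a hypothetical parser based on the sample output above; it accepts single or double quotes around the key name in case your redis-cli version prints it differently, and it includes a bytes-to-GiB conversion.

```python
import re
from typing import List, NamedTuple

BIGGEST_LINE = re.compile(r"Biggest\s+(\w+)\s+found\s+['\"](.*)['\"]\s+has\s+(\d+)\s+(\w+)")


class BiggestKey(NamedTuple):
    kind: str    # "string", "zset", "hash", ...
    key: str
    amount: int
    unit: str    # "bytes" for --memkeys, "members"/"items"/"fields" for --bigkeys


def parse_biggest(output: str) -> List[BiggestKey]:
    """Collect the "Biggest <type> found '<key>' has <n> <unit>" lines."""
    results = []
    for line in output.splitlines():
        match = BIGGEST_LINE.search(line)
        if match:
            kind, key, amount, unit = match.groups()
            results.append(BiggestKey(kind, key, int(amount), unit))
    return results


def to_gib(num_bytes: int) -> float:
    """Convert bytes to GiB (1024 ** 3 bytes)."""
    return num_bytes / 1024 ** 3


sample = """
Biggest string found 'xxx:xxxxxxxx:xxx:xx' has 217954 bytes
Biggest zset found 'my_key' has 19532877467 bytes
"""
zset = parse_biggest(sample)[1]
print(f"{zset.key}: {to_gib(zset.amount):.2f} GiB")  # my_key: 18.19 GiB
```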

There you have it…

But where else can you see the problem?

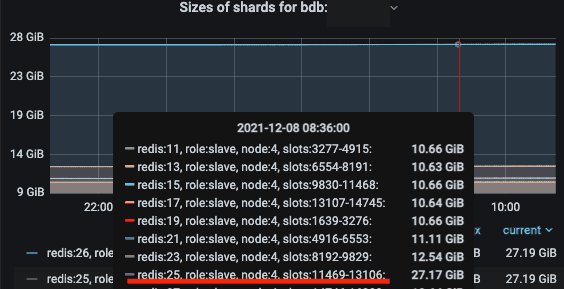

Again, if you implemented Grafana/Prometheus (here is how to do it in Redis Cloud), you will probably see it in the shard graphs.

In the snapshot above, shard ID 25 has 27GB of data, while all the other shards are roughly the same size…

Why is that?

Well, shard 25 holds one huge key of ±18GB that can move only in its entirety (not partially).

So, it’s a textbook example of how bigKeys can hurt your app.

How to resolve the bigKeys issue in Redis?

It’s simple…

Just break down the key into multiple smaller keys.

Try to follow the rule of thumb: keep a single key below 1MB and, if it’s a composite (a list or a hash), under 10,000 elements.
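As an illustration of breaking a big key down, the sketch below splits a collection into sub-keys of at most 10,000 elements each. The naming scheme my_key:part:N and the helpers are hypothetical, and splitting by position only suits data you read in order; for a sorted set you would more often split by score range (for example, one sub-key per day) so that reads know which part to query.

```python
from typing import Dict, List, Sequence


def subkeys_needed(total_elements: int, max_per_key: int = 10_000) -> int:
    """Number of sub-keys needed to keep each one at or below `max_per_key` elements."""
    return (total_elements + max_per_key - 1) // max_per_key  # integer ceiling


def chunk_members(base_key: str, members: Sequence[str],
                  max_per_key: int = 10_000) -> Dict[str, List[str]]:
    """Split `members` into sub-keys named base_key:part:0, base_key:part:1, ..."""
    return {
        f"{base_key}:part:{i}": list(members[start:start + max_per_key])
        for i, start in enumerate(range(0, len(members), max_per_key))
    }


print(subkeys_needed(23_463_221))  # 2347
```

For the my_key example above (about 23 million members), that works out to 2,347 sub-keys, which can then be spread across slots and shards like any other keys.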

Sometimes you will need keys that span megabytes (MBs), which is perfectly fine.

Still, monitor their growth carefully and raise a “red flag” in time…

Never let the key grow past 512MB (it’s a big NO-NO; 512MB is also the hard size limit for a single string value).

(If you require such functionality, your app architecture needs some work as this is against the standards.)

Final thoughts

Redis is an excellent solution if your app requires a highly-performant database.

(You really have to do a lot of bad things to a Redis DB before it breaks…)

Still, Redis has a few gotchas that can be hard to see and troubleshoot.

Here I showed you an example of two common issues — hotKeys and bigKeys.

Both of these issues are caused by violating some of the recommended principles for optimal performance.

(Almost always, you will see a higher latency when this happens.)

Thanks to this article, you can understand the problem and troubleshoot the issue yourself (or with Redis support).

Either way, you can resolve the hotKeys and bigKeys problems before they make your DB suffer.

Hope this helps!

P.S. Check out my post on seamlessly migrating from Elasticache Redis to Redis Cloud Enterprise.

Full disclaimer: I work at Redis (the company), and this article reflects my personal view on the problem (based on my experience).
